Earlier this week, AWS launched DeepComposer, a set of web-based tools for learning about AI to make music and a $99 MIDI keyboard for inputting melodies. That launch created a fair bit of confusion, though, so we sat down with Mike Miller, the director of AWS’s AI Devices group, to talk about where DeepComposer fits into the company’s lineup of AI devices, which includes the DeepLens camera and the DeepRacer AI car, both of which are meant to teach developers about specific AI concepts, too.

The first thing that’s important to remember here is that DeepComposer is a learning tool. It’s not meant for musicians — it’s meant for engineers who want to learn about generative AI. But AWS didn’t help itself by calling this “the world’s first machine learning-enabled musical keyboard for developers.” The keyboard itself, after all, is just a standard, basic MIDI keyboard. There’s no intelligence in it. All of the AI work is happening in the cloud.

“The goal here is to teach generative AI as one of the most interesting trends in machine learning in the last 10 years,” Miller told us. “We specifically [chose] GANs, generative adversarial networks, where there are two networks that are trained together. The reason that’s interesting from our perspective for developers is that it’s very complicated and a lot of the things that developers learn about training machine learning models get jumbled up when you’re training two together.”
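To make "two networks that are trained together" concrete, here is a minimal toy sketch in plain Python. It is not DeepComposer's model: the one-dimensional data, the linear generator, the logistic discriminator, and every name and constant are assumptions chosen for illustration. It only shows the alternating pattern, where the discriminator takes a step on real versus generated samples and the generator then takes a step against the freshly updated discriminator.

```python
import math
import random


def sigmoid(s: float) -> float:
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(steps: int = 200, lr: float = 0.05, seed: int = 0):
    """Alternate discriminator and generator updates on 1-D toy data.

    Hypothetical setup: real samples come from N(4, 1); the generator maps
    noise z to a * z + b; the discriminator is sigmoid(w * x + c).
    """
    rng = random.Random(seed)
    a, b = 1.0, 0.0   # generator parameters
    w, c = 0.1, 0.0   # discriminator parameters
    history = []      # (discriminator loss, generator loss) per step
    eps = 1e-12       # guards the logarithms against log(0)

    for _ in range(steps):
        x_real = rng.gauss(4.0, 1.0)
        z = rng.gauss(0.0, 1.0)
        x_fake = a * z + b

        # Discriminator step: push D(x_real) toward 1 and D(x_fake) toward 0.
        d_real = sigmoid(w * x_real + c)
        d_fake = sigmoid(w * x_fake + c)
        d_loss = -(math.log(max(d_real, eps)) + math.log(max(1.0 - d_fake, eps)))
        w -= lr * ((d_real - 1.0) * x_real + d_fake * x_fake)
        c -= lr * ((d_real - 1.0) + d_fake)

        # Generator step: push D(x_fake) toward 1 using the updated discriminator
        # (non-saturating loss, -log D(x_fake)).
        d_fake = sigmoid(w * x_fake + c)
        g_loss = -math.log(max(d_fake, eps))
        a -= lr * (d_fake - 1.0) * w * z
        b -= lr * (d_fake - 1.0) * w

        history.append((d_loss, g_loss))

    return a, b, w, c, history


if __name__ == "__main__":
    a, b, w, c, history = train_toy_gan()
    print(f"generator: a={a:.3f}, b={b:.3f}")
    print(f"discriminator: w={w:.3f}, c={c:.3f}")
```

Because each network's loss depends on the other's current parameters, neither loss curve falls steadily the way it does when a single model is trained, which is one reading of what Miller means by things getting "jumbled up."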

With DeepComposer, the developer steps through a process of learning the basics. With the keyboard, you can input a basic melody, but if you don’t have one, you can also use an on-screen keyboard to get started or pick one of a few default melodies (think “Ode to Joy”). The system then generates a background track for that melody based on a musical style you choose. To keep things simple, it ignores some values from the keyboard, including velocity (just in case you needed more evidence that this is not a keyboard for musicians). More importantly, developers can then dig into the actual models the system generated and even export them to a Jupyter notebook.

For the purpose of DeepComposer, the MIDI data is just another data source to teach developers about GANs and SageMaker, AWS’s machine learning platform that powers DeepComposer behind the scenes.

“The advantage of using MIDI files and basing our training on MIDI is that the representation of the data that goes into the training is in a format that is actually the same representation of data in an image, for example,” explained Miller. “And so it’s actually very applicable and analogous, so as a developer looks at that SageMaker notebook and understands the data formatting and how we pass the data in, that’s applicable to other domains as well.”
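One common way to make note data look like an image is a piano roll: a grid with time steps on one axis and pitches on the other, where a cell is 1 while a note sounds. The sketch below assumes that representation; the article does not say which format DeepComposer actually uses, and the `Note` tuple layout and function name here are hypothetical. Velocity is read and then discarded, mirroring the simplification described earlier.

```python
from typing import List, Tuple

# Hypothetical note layout: (pitch, start_step, duration_steps, velocity)
Note = Tuple[int, int, int, int]


def notes_to_piano_roll(
    notes: List[Note], n_steps: int, n_pitches: int = 128
) -> List[List[int]]:
    """Return an n_steps x n_pitches grid of 0/1 values, like a binary image."""
    roll = [[0] * n_pitches for _ in range(n_steps)]
    for pitch, start, duration, _velocity in notes:  # velocity is ignored
        if not 0 <= pitch < n_pitches:
            continue  # skip pitches outside the grid
        for step in range(max(start, 0), min(start + duration, n_steps)):
            roll[step][pitch] = 1
    return roll


# First four notes of "Ode to Joy" (E, E, F, G) as MIDI note numbers.
melody = [(64, 0, 1, 90), (64, 1, 1, 70), (65, 2, 1, 100), (67, 3, 1, 80)]
roll = notes_to_piano_roll(melody, n_steps=4)
```

Once the melody is a fixed-size grid of numbers, it can be passed to a model the same way a small single-channel image would be, which is the analogy Miller draws.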

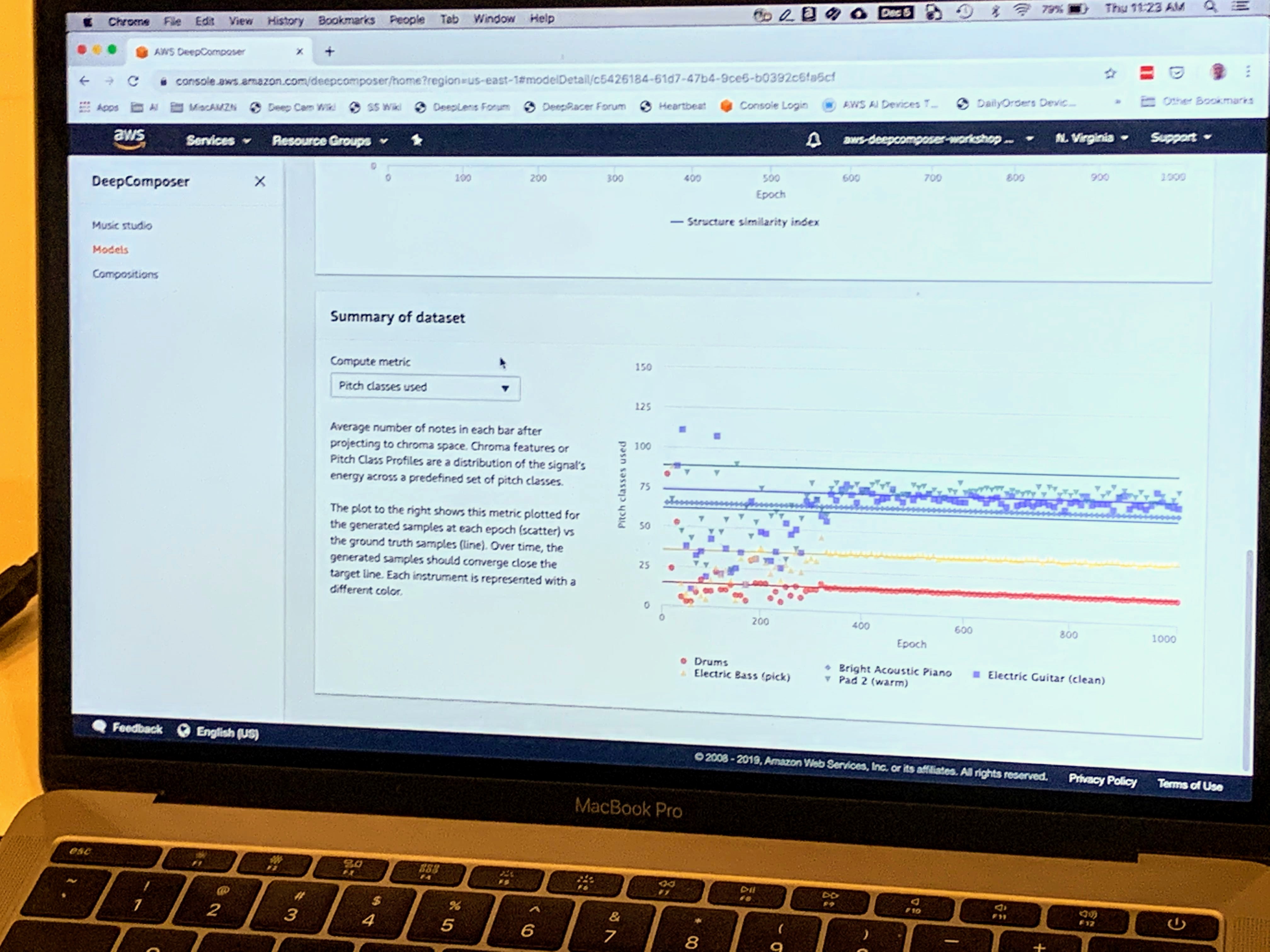

That’s why the tools expose all of the raw data, too, including loss functions, analytics and the results of the various models as they try to get to an acceptable result. Because this is a tool for generating music, it also exposes some data about the music itself, like pitch and empty bars.

“We believe that as developers get into the SageMaker models, they’ll see that, hey, I can apply this to other domains and I can take this and make it my own and see what I can generate,” said Miller.

Having heard the results so far, I think it’s safe to say that DeepComposer won’t produce any hits soon. It seems pretty good at creating a drum track, but its bass lines seem a bit erratic. Still, it’s a cool demo of this machine learning technique. My guess, though, is that it will be less successful than DeepRacer, a concept that is easier for most people to understand: the majority of developers will look at DeepComposer, think they need to be able to play an instrument to use it, and move on.

Additional reporting by Ron Miller.

from TechCrunch https://ift.tt/388OQRP
